Quick Tip: Add accurate motion blur to your warps.

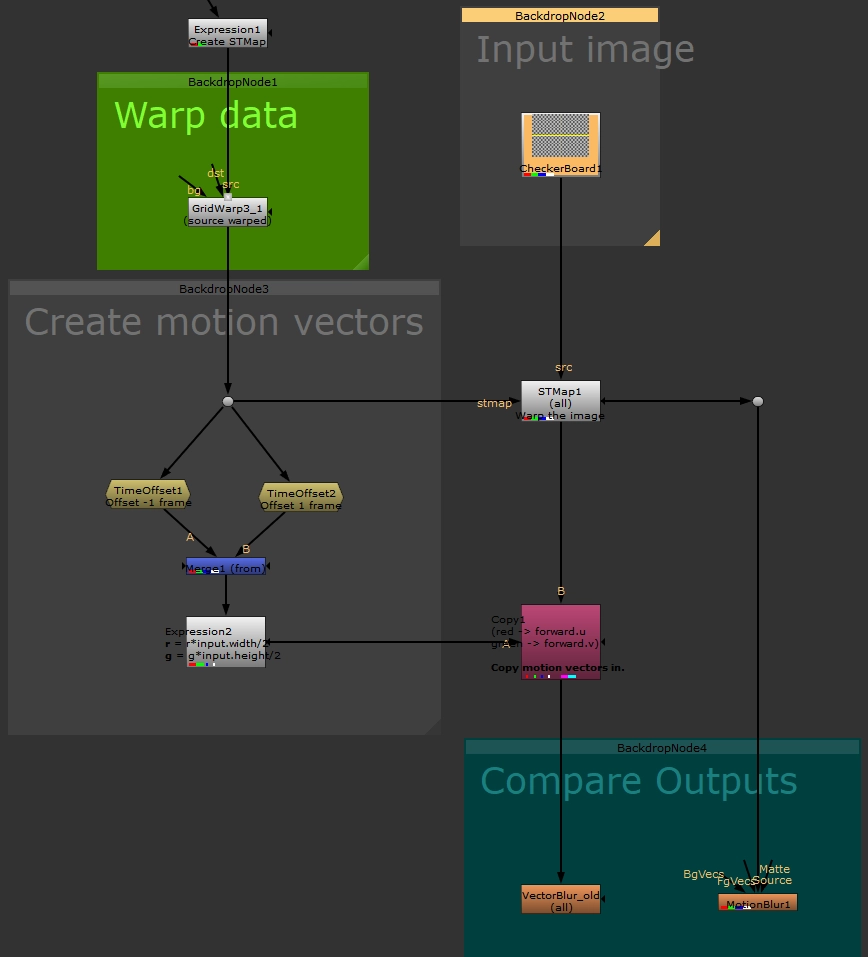

When warping, I always use ST Maps as the base, as they provide so much extra control. Nuke's built-in "MotionBlur" node, RSMB, and other nodes can generate motion blur from our warped input images. However, these nodes generate new motion vectors, essentially making their best guess at where the pixels are travelling. Wouldn't it be better to use our warp data to drive this instead?

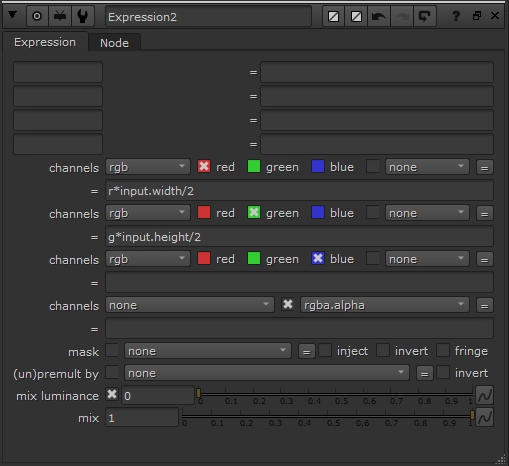

When we use ST Maps for our warps, we already have the pixel-transformation data we need for motion blur, and just need to convert it into motion vectors. Using the above setup (which you can download here), we can calculate the motion by finding the difference between the previous and next frames, and modifying the pixel values with this expression:

We can then apply motion blur using these motion vectors by copying them into our B-pipe, and using a VectorBlur node.
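To make the ST-Map-to-vector conversion a little more concrete, here is a minimal Python sketch of the arithmetic for a single pixel. It is not the expression from the setup above, just an illustration under some assumptions: ST values are normalized 0-1, motion vectors are measured in pixels, and the function name `st_to_motion` and the sample numbers are hypothetical. The sign convention is also an assumption and may need flipping depending on how your vectors are interpreted.

```python
# Sketch only: the names and conventions here are assumptions,
# not the expression used in the downloadable setup.

def st_to_motion(st_prev, st_next, width, height):
    """Convert one pixel's ST values on the previous and next frames
    into a motion vector measured in pixels.

    st_prev, st_next: (s, t) tuples, normalized 0-1 (assumed convention).
    Returns (u, v): the displacement across the two-frame span.
    """
    du = (st_next[0] - st_prev[0]) * width
    dv = (st_next[1] - st_prev[1]) * height
    return (du, dv)


# A pixel whose ST lookup shifts by 0.01 in s and 0.005 in t between the
# previous and next frames, in a 1920x1080 image (hypothetical numbers).
vector = st_to_motion((0.50, 0.50), (0.51, 0.505), 1920, 1080)
```

Note that this vector covers the whole span from the previous frame to the next frame, so it has not yet been scaled down to a single frame's worth of motion.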

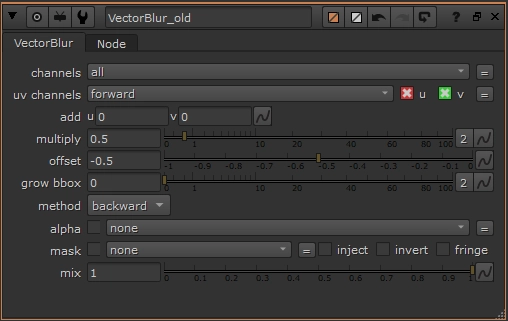

You might have noticed in the image of my node graph that I'm using the old VectorBlur node. I prefer it as it gives more predictable results. You can easily access it by hitting X with your cursor over the node graph, and typing "VectorBlur".

In the old VectorBlur node, I'm setting uv channels to forward, which is where I copied my previously-created motion vectors. I'm also setting multiply to 0.5.

0.5 is the magic number because we're calculating our motion vectors from the difference between the frame before and the frame after. That difference spans two frames, which gives double the blur, so we compensate by adding only "half" the blur.
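The halving can be checked with a quick bit of arithmetic. This is a small Python sketch using made-up numbers: a point moving a steady 10 pixels per frame, with the hypothetical helper `per_frame_motion` standing in for the multiply knob.

```python
def per_frame_motion(pos_prev, pos_next, multiply=0.5):
    """Scale a previous-to-next-frame difference down to one frame of motion."""
    return (pos_next - pos_prev) * multiply


# A point moving a steady 10 px per frame (hypothetical): x = 90, 100, 110.
two_frame_difference = 110 - 90      # spans two frames, so double the motion
scaled = per_frame_motion(90, 110)   # multiply of 0.5 brings it back to one frame
```

Here the raw difference is 20 pixels, and multiplying by 0.5 recovers the 10 pixels the point actually travels in one frame.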

This will give us the same result as setting any node's shutter offset knob to centered, and the shutter to 0.5.